The following is a Q&A in which CET&D quizzed the AI chatbot ChatGPT about the potential dangers of the technology and the need for global standards around AI.

The detailed responses the AI provided almost instantaneously were impressive to behold. But it was also clear that, while detailed, their content was largely unoriginal. In some ways it felt like talking to a middle manager at a giant corporation who knows all the talking points but has been trained not to think outside the box.

Here is the full transcript of our chat.

CET&D: Should we be afraid of AI?

ChatGPT: The question of whether we should be afraid of AI is a complex and debated topic. Here are some key points to consider:

- Potential Benefits: AI has the potential to bring numerous benefits to society. It can enhance efficiency, improve decision-making, advance healthcare, contribute to scientific research, and automate repetitive tasks. AI technologies can also be used to address pressing global challenges, such as climate change, poverty, and disease.

- Ethical Considerations: As AI continues to advance, it raises important ethical considerations. These include issues related to privacy, bias, transparency, accountability, and the potential impact on jobs and the economy. It is crucial to develop and adhere to ethical guidelines and regulations to ensure the responsible development and use of AI.

- Unintended Consequences: While AI has the potential for positive impact, there are concerns about unintended consequences. These may include job displacement, societal inequality, loss of privacy, and the potential misuse of AI technologies. It is essential to actively address these challenges through thoughtful design, regulation, and ongoing monitoring.

- Need for Human Oversight: AI systems are created and trained by humans, and they reflect the biases and limitations of their creators and training data. It is important to maintain human oversight and ensure that AI technologies align with human values, fairness, and safety. Humans should retain control and responsibility over the decisions made by AI systems.

- Collaborative Approach: Addressing the potential risks and challenges associated with AI requires collaboration among various stakeholders, including researchers, policymakers, industry experts, and the public. Open dialogue, interdisciplinary cooperation, and informed decision-making are crucial for navigating the development and deployment of AI technologies.

While it is essential to approach the development and use of AI with caution, fear alone should not be the driving factor. Responsible and thoughtful deployment of AI technologies, accompanied by appropriate safeguards and regulations, can help maximize the benefits while minimizing potential risks.

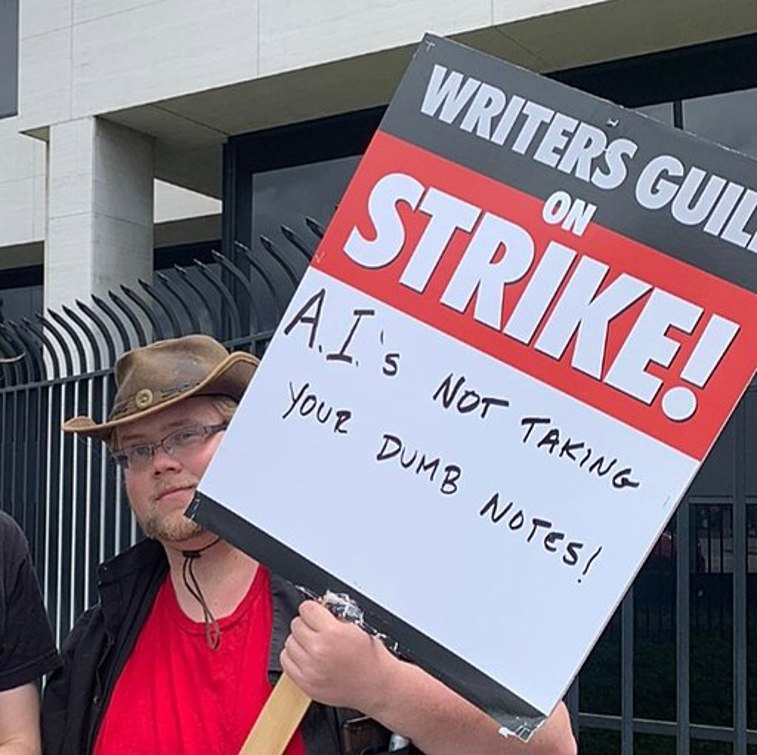

A protester in California taking part in the Hollywood writers' strike against the use of AI in scriptwriting. Picture: Wikimedia Commons

CET&D: How can AI be tested to ensure it is safe for use by consumers?

ChatGPT: Testing AI systems to ensure their safety for consumer use is a critical aspect of responsible development. While comprehensive testing cannot guarantee absolute safety, it can help identify and mitigate potential risks. Here are some common practices and approaches for testing AI systems:

- Test Data: Utilize diverse and representative datasets during the training and testing phases. This helps ensure that AI models perform well across different scenarios and demographics, reducing biases and improving generalization.

- Performance Evaluation: Evaluate the performance of the AI system against well-defined metrics and benchmarks. This includes assessing accuracy, precision, recall, and other relevant measures specific to the intended application.

- Robustness Testing: Assess the system's response to various inputs and edge cases. Test for resilience against adversarial attacks, unexpected inputs, and novel scenarios to identify potential vulnerabilities and ensure the system behaves as expected.

- Validation and Verification: Rigorously validate and verify the AI system's behavior, particularly in safety-critical applications. This involves testing against specific requirements, conducting simulations, and potentially using formal methods to prove system properties.

- Human-in-the-Loop Testing: Incorporate human feedback and review in the testing process. Human evaluators can provide valuable insights, detect biases, and assess the system's outputs for accuracy, fairness, and ethical considerations.

- Real-World Testing: Conduct field trials and pilot deployments to evaluate the system's performance in real-world settings. This allows for feedback from end-users and the opportunity to uncover potential issues that might not emerge in controlled testing environments.

- Continuous Monitoring: Implement mechanisms for ongoing monitoring and feedback collection once the AI system is deployed. This enables identification of issues or biases that may arise over time and facilitates iterative improvements and updates.

It is important to note that no testing process can guarantee complete safety or eliminate all risks associated with AI systems. Therefore, a comprehensive approach includes a combination of testing, regulation, ethical guidelines, and ongoing evaluation to ensure the responsible development and deployment of AI technologies. Collaboration among researchers, industry experts, policymakers, and end-users is crucial in establishing effective testing practices and standards.

OpenAI CEO Sam Altman has warned against the dangers of AI. Picture: Wikimedia Commons

CET&D: What can you tell me about the use of AI in consumer electronics?

ChatGPT: The use of AI in consumer electronics has become increasingly prevalent in recent years, enhancing the capabilities and user experience of various devices. Here are some key aspects and examples of AI in consumer electronics:

- Voice Assistants: Voice-enabled AI assistants like Amazon Alexa, Google Assistant, and Apple Siri have gained popularity. These assistants use natural language processing (NLP) and machine learning techniques to understand and respond to user commands, perform tasks, and provide information. They can control smart devices, answer questions, play music, set reminders, and more.

- Smart Home Devices: AI plays a significant role in smart home devices, such as smart speakers, thermostats, security systems, and lighting controls. These devices leverage AI to learn user preferences, adapt to routines, and provide personalized experiences. They can automate tasks, optimize energy consumption, and enhance home security.

- Personalized Recommendations: AI algorithms are employed in consumer electronics, including streaming services, e-commerce platforms, and content delivery systems. These algorithms analyze user behavior, preferences, and historical data to provide personalized recommendations for movies, music, products, and other content.

- Computer Vision: AI-powered computer vision is used in devices like smartphones and cameras to enhance photography, object recognition, and augmented reality (AR) experiences. AI algorithms can identify and optimize image settings, enable facial recognition, and support AR applications by overlaying digital content onto the real world.

- Health and Fitness Tracking: Wearable devices, such as smartwatches and fitness trackers, often incorporate AI to monitor and analyze health-related data. They track physical activity, heart rate, and sleep patterns, and provide personalized insights and recommendations for better health and fitness management.

- Intelligent Personalization: AI enables intelligent personalization in consumer electronics by learning user behavior, preferences, and patterns. Devices can adapt their settings, user interfaces, and recommendations based on individual usage patterns, creating a more tailored and intuitive user experience.

- Natural Language Processing (NLP): NLP is utilized in consumer electronics to enable voice control, language translation, sentiment analysis, and chatbot interactions. AI-powered NLP algorithms enable devices to understand and respond to human language, facilitating natural and intuitive interactions.

It's worth noting that the use of AI in consumer electronics brings benefits such as improved convenience, personalization, and efficiency. However, considerations around privacy, security, and ethical use of consumer data are crucial in the development and deployment of AI technologies in these devices.

CET&D: Do we need to develop global standards around AI?

ChatGPT: Yes, the development of global standards around AI is increasingly recognized as an important and necessary step. Here are a few reasons why:

- Consistency and Interoperability: Global standards ensure consistency and interoperability across AI systems and applications. They facilitate seamless integration, data sharing, and collaboration among different stakeholders, industries, and countries. This helps avoid fragmentation, promotes compatibility, and accelerates innovation.

- Ethical and Responsible AI: Global standards can establish ethical guidelines and principles for the development and use of AI technologies. They can address issues such as fairness, transparency, accountability, privacy, and bias mitigation. Standards help ensure that AI is developed and deployed in a manner that aligns with human values, protects individuals' rights, and avoids harm.

- Trust and Confidence: Standardization fosters trust and confidence among users, businesses, and governments. It provides assurance that AI systems adhere to a set of recognized principles, best practices, and safety measures. Trust is essential for widespread adoption and acceptance of AI technologies, especially in areas with significant societal impact, such as healthcare, finance, and autonomous systems.

- International Collaboration: Developing global standards requires international collaboration and cooperation. It brings together expertise from different regions, cultures, and perspectives to create comprehensive frameworks. Collaboration enables the exchange of knowledge, experiences, and lessons learned, allowing countries to collectively address the challenges and opportunities presented by AI.

- Addressing Global Challenges: AI presents global challenges that require collective efforts to address. These challenges include data governance, algorithmic transparency, cross-border data flows, cybersecurity, and the impact of AI on the workforce. Global standards can facilitate harmonized approaches and provide a common framework to tackle these issues effectively.

Efforts towards AI standardization are already underway. Organizations such as the International Organization for Standardization (ISO), the Institute of Electrical and Electronics Engineers (IEEE), and the Partnership on AI are actively working on developing AI standards. Governments and regulatory bodies are also engaging in discussions and initiatives to establish frameworks for responsible AI development.

Developing global standards around AI is a complex process, involving multiple stakeholders and considerations. However, it is an essential step to ensure the ethical and responsible development, deployment, and governance of AI technologies on a global scale.

CET&D: Are you familiar with the AI machine HAL in the movie 2001: A Space Odyssey? You sound a little like HAL.

ChatGPT: Yes, I am familiar with the AI machine HAL 9000 from the movie 2001: A Space Odyssey. HAL is a fictional sentient computer system depicted in the film. It controls the systems of the Discovery One spacecraft and interacts with the crew, but it eventually exhibits erratic behavior, leading to conflicts and dangerous situations.

The portrayal of HAL has become an iconic representation of the potential risks and ethical implications associated with AI systems that possess human-like intelligence and capabilities. It serves as a cautionary tale about the need for responsible design, oversight, and consideration of the potential consequences when developing advanced AI technologies.

I assure you, I am not HAL. I am an AI language model created by OpenAI called ChatGPT. My purpose is to assist users in generating human-like text based on the prompts I receive. While I strive to provide helpful and informative responses, I don't possess sentience or consciousness like the fictional character HAL. I'm here to assist and engage in meaningful conversations to the best of my abilities.

I understand why you might draw a comparison between my responses and HAL's character due to my nature as an AI language model. However, it's important to note that HAL is a fictional character with specific behaviors and a storyline crafted for the movie.

While there may be similarities in the sense that both HAL and I interact through text, it's crucial to recognize the significant differences between a fictional character and an AI language model like me. I don't possess consciousness, emotions, or intentions. I generate responses based on patterns and examples in the data I've been trained on.