DXOMARK has more than two decades of experience testing image quality with its groundbreaking and user-centric camera image quality suite for smartphones, which was followed more recently by extensive audio, display and battery tests.

The application of scientific rigor and in-the-field evaluation is what makes DXOMARK’s scores and rankings such reliable indicators of the user experience. So now, in a natural extension of what it already does with smartphones (as well as cameras, lenses and speakers), DXOMARK engineers have turned their expertise to laptops. They have created a testing suite specifically designed to evaluate the user experience of video calling, listening to music, and watching video on laptops. Why laptops? Because these devices, whether used at work or at home, have successfully maintained their status as the critical bridge between work and play.

A changed landscape

One of the factors that led to the development of this protocol was the outbreak in early 2020 of the Covid-19 pandemic, which, virtually overnight, drastically changed lives and the way people work. All at once, videoconferencing wasn’t just an optional and occasional activity: Office workers around the world found themselves attending online meetings on a daily basis. The use of collaborative software like Zoom, Microsoft Teams, and Slack nearly doubled between 2018 and 2022.1

With lockdowns and bans on traveling, families and friends had to resort to video calling to be able to virtually celebrate special events or to simply keep in touch. At the time, laptops weren’t known for having high-quality built-in cameras, mainly because they were so rarely used. With demand for external webcams outstripping supply, people were left using their laptop’s built-in camera, and the image quality varied greatly among the devices.

Photo credit: DXOMARK

Even though quarantines and restrictions have eased, the business landscape today remains changed. Going into the office is now optional or occasional for many workers, meaning that videoconferencing is still a principal way for professionals to collaborate, discuss, and share.

The pandemic showed just how important the laptop has become, not only as a necessary work-from-home tool, but also a personal device for watching videos or listening to music while working. As a result, consumers have started paying more attention to the quality of their laptops’ performance in these areas.

Elements of the Laptop protocol

In light of this new work environment, DXOMARK engineers from the Camera, Audio and Display teams got together to create a protocol that could help manufacturers and end-users alike make good choices when developing or purchasing a laptop.

To better understand how consumers use their laptops and what they prefer, we recently conducted a survey with YouGov. It showed that laptops were used mostly for web browsing (76%), office work (59%), streaming video (44%), and listening to music (35%).

Laptops can do a myriad of things, but since web browsing is highly reliant on internet connectivity and office work does not require high-end specifications for camera, display or audio, we chose to focus this laptop protocol on two use cases: video calling, and music and video playback.

Video calls rely on the camera, audio and display systems of the laptop to work together seamlessly, so that a correctly exposed and focused image is properly displayed, while the speaker’s voice is intelligible and coherent. For example, everyone wants to be able to easily watch the content on their laptop screens, and most screens are perfectly legible in indoor conditions. But how easily can you see screen content in bright conditions, especially if your screen is highly reflective? How well can you discern someone’s facial features when they are backlit?

Photo credit: Pixabay

The Music & Video use case assesses the display and audio playback functionalities of a laptop when watching videos and movies, or when listening to music.

The protocol also evaluates the elements of these use cases separately. In Camera, video call quality evaluation assesses attributes such as face exposure in different light conditions, skin-tone rendering and motion blur in situations ranging from a formal one-person office meeting to an informal group call from the sofa.

In Audio, capture, playback, and duplex quality during video calls are evaluated, as is playback for listening to music and watching videos. In video calls, these evaluations cover the main audio quality attributes, as well as aspects specific to video calls, such as duplex audio. The main attributes measured are timbre, dynamics, spatial, volume and artifacts, and they are also applied to audio playback. People want to clearly hear words emanating from the speakers or be able to pick out specific instruments or riffs in a piece of music. This is pretty easy to do if you’re in a quiet room by yourself. But how easily can you understand what someone is saying if there’s a lot of background noise and crosstalk in your location, their location, or both?

In Display, we evaluate the quality of the video rendering while running a video call or watching a movie. Readability is central to our test, with two elements: the suitability of the brightness range to the lighting environment, and a qualitative and quantitative evaluation of reflection. Can you enjoy watching a movie on your laptop when you’re in a low-lit bedroom or outside on a terrace? This readability test is relevant for both the video call and multimedia use cases. We also test color rendering, brightness and gamma when playing both SDR and HDR content.

With the building blocks of the protocol set, the testing was ready to begin.

Our testing philosophy

First, our laptop testing applies the typical DXOMARK “recipe,” which combines measurements in our labs with real-life tests. Second, we test under rigorously scientific conditions both inside and outside the lab, using industry-leading equipment and following internationally recognized procedures for our objective and perceptual measurements.

The following examples show how we test laptops.

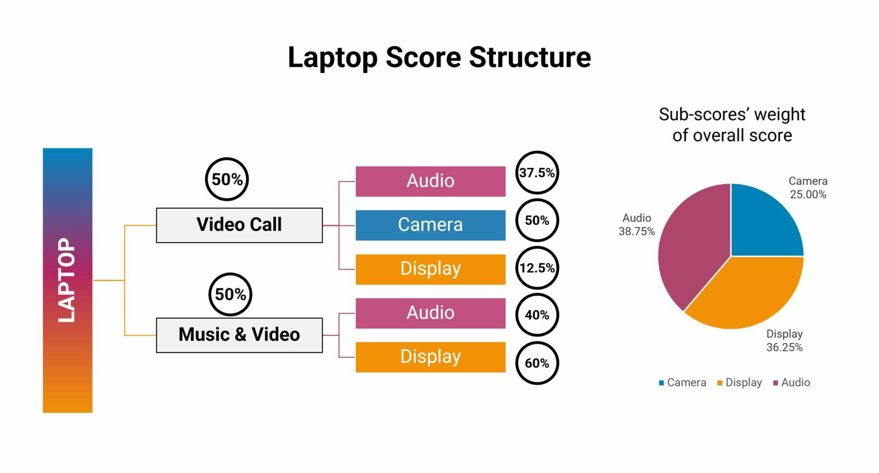

How is the laptop score built?

The diagram below illustrates the features that we test for each use case and shows the overlap between the two, notably for the display attributes of reflectance and color fidelity, and for audio playback quality. As you can see, the video calling use case evaluates many of the same attributes that DXOMARK tests in its smartphone camera protocol and includes audio capture attributes. By contrast, the Music & Video use case includes neither camera testing nor audio capture evaluation; instead, it relies on the attributes we use to score smartphone displays.

Photo credit: DXOMARK

Both use cases are accorded nearly equal weight when determining an overall laptop quality score. But as with our other protocols, understanding how well a given laptop performs in each use case is important. If you rarely use your laptop for video calls but watch a lot of movies on it, you will want to pay more attention to your device’s multimedia capabilities. If you’re spending many hours each week in calls with colleagues or clients, then your device’s handling of HDR10 video content may not matter so much to you.
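To make the idea of weighting concrete, here is a minimal Python sketch of how two use-case scores could be blended into one overall figure. It is an illustration only: the exact weights and formula are not given in this article, so the default 50/50 split, the `UseCaseScores` type, the `overall_score` function and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCaseScores:
    """Hypothetical container for the two use-case scores."""
    video_call: float
    music_video: float


def overall_score(scores: UseCaseScores, video_call_weight: float = 0.5) -> float:
    """Blend the two use-case scores with a weighted average.

    ASSUMPTION: the article only says the use cases carry "nearly equal
    weight"; the 0.5 default and the linear formula are placeholders,
    not the published method.
    """
    if not 0.0 <= video_call_weight <= 1.0:
        raise ValueError("video_call_weight must be between 0 and 1")
    music_video_weight = 1.0 - video_call_weight
    return (video_call_weight * scores.video_call
            + music_video_weight * scores.music_video)


# Made-up scores for an imaginary laptop, not real test results.
example = UseCaseScores(video_call=80.0, music_video=60.0)

# Equal weighting of the two use cases.
balanced = overall_score(example)

# A reader who mostly makes video calls could weight that use case more heavily.
call_heavy = overall_score(example, video_call_weight=0.75)
```

Changing `video_call_weight` mirrors the advice above: a reader who spends most of the week in calls can lean on the Video call score, while a frequent movie watcher can lean on Music & Video.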

What types of laptop products do we cover?

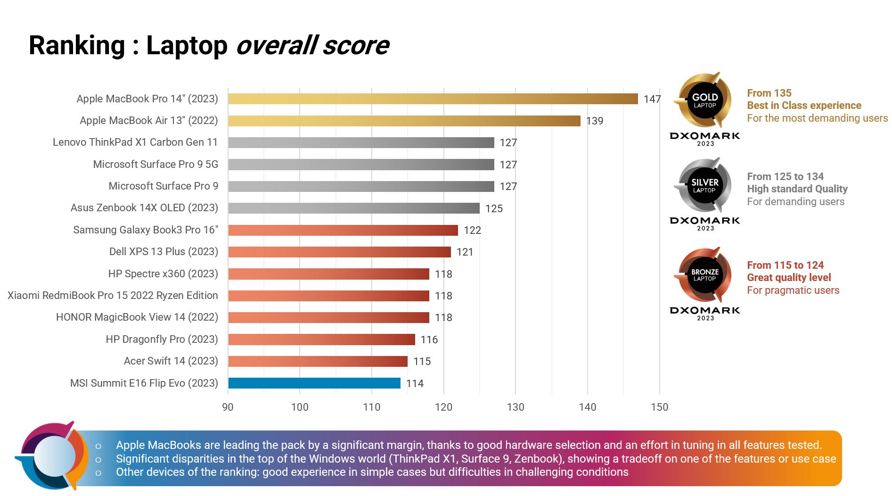

We debut the Laptop protocol with a selection of 14 main laptops from 11 different brands that are currently available on the market. We focused our first round of tests on laptops that were at the higher end of the market because these types of devices tend to include the latest technologies and have the potential to offer the best-in-class experience in video calling as well as music and video streaming.

We plan to vary the kinds of laptops we test, and our choices will consider various operating systems as well as the evolution of the latest technology.

Ranking results and insights

We observed significant differences in performance for laptops compared to what we are accustomed to seeing on smartphones.

For example, a laptop is equipped with a built-in front-facing camera, just like a smartphone. However, we observed that in most cases, the single laptop camera performed worse than the front camera on a smartphone, particularly in face exposure, color, clarity, tone, sharpness and detail, all of which are important when videoconferencing.

Laptop displays are far more diverse than smartphone displays, notably in the type of panel offered. For example, while the choice of a matte or glossy panel does not directly impact the score, its implementation could impact readability, which is a key element in our protocol.

When it comes to the video experience, HDR panels remain a niche even on many of today’s top products. Even among laptops equipped with HDR panels, we observed disparities because devices were not necessarily tuned to benefit from the HDR panels’ full potential. This was particularly true for Windows laptops, which showed strong clipping in the highlights of HDR videos at maximum brightness, thereby limiting the user experience. In other cases, laptops without HDR panels were still able to decode basic HDR formats thanks to tone-mapping adaptation.

Photo credit: DXOMARK

In the world of laptop performance, it is rare to find a device that stands out solely for its audio performance, since most manufacturers face significant tradeoffs in speaker and microphone placement as well as constraints on form factor and maximum weight. However, our results showed that, when it came to video calls, there were fewer disparities in quality among the 14 devices we tested. Video call scores for these laptops were relatively high across the board, and all the devices passed.

A more detailed look at the results shows that Apple laptops led the ranking, delivering excellent performance across both the Video call and Music & Video use cases. In Video call, the two tested MacBooks showed accurate exposure even in difficult conditions, as well as great intelligibility in capture and playback. Their performance in playback and display also brought them to the top of the rankings in the Music & Video use case.

Among the standout Windows laptops, the Lenovo ThinkPad X1 delivered a great overall experience in both use cases, placing in the top three in Camera and Display while showing only average performance in Audio tests, despite strong hardware capabilities.

The Microsoft Surface Pro 9 and Surface Pro 9 5G delivered very good performance, particularly in video call, where they scored the best among all the Windows laptops we tested. Both devices’ scores, however, were held back by their performance in Display.

The Asus Zenbook 14X OLED performed well in Audio, even managing to beat the MacBook, but it showed an average performance in Display, where it lacked readability, and in Camera, where it showed insufficient face exposure in challenging situations such as backlit scenes.

Outside of the laptops mentioned, the other laptops provided a good experience in simple cases, but showed difficulties in challenging conditions.

This article was originally published on DXOMARK's website and is only reproduced here with their kind permission.